Shifting Performance to the Mainstream

Nowadays, customers and project owners increasingly understand the need for performance testing as part of the application development lifecycle. Still, performance testing often remains an informal and poorly understood discipline. Development teams can become too focused on delivering the application's functionality, neglecting non-functional requirements and ultimately lacking an effective overall test automation strategy and infrastructure.

Let us first look at performance testing from a user’s perspective. When we ask end users about the performance of an application, the answer we usually get is:

“If I can carry out the actions without any delay or hazard then the performance is good.”

With this perspective in mind, if we need to run a performance test, how should we conduct it?

Naïve Knowledge Around Performance Testing:

While I was engaged in the performance testing of an application, whenever I asked customers about their experience with performance testing, this is how they responded:

“We invited 100+ people to lunch and asked them to gather in a conference room. Then we handed them instructions to perform user actions on the application under test, and we strategically checked the response time with a stopwatch.”

Notice the approach the customers took here. They knew the importance of performance tests but did not know how to set one up. This approach is flawed because correlating response times from 100 separate individuals is difficult: think time and action time vary from person to person.
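An automated script avoids this problem by controlling think time and timing each action programmatically. The Python sketch below shows the idea with threads acting as virtual users. `simulated_user_action` is a hypothetical placeholder for a real request to the application under test, and the 20 ms delay and ±20% think-time jitter are arbitrary assumptions.

```python
import random
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def simulated_user_action() -> None:
    """Hypothetical stand-in for one user action against the application under test."""
    time.sleep(0.02)  # pretend the server takes about 20 ms to respond


def virtual_user(user_id: int, actions: int, think_time_s: float) -> list[float]:
    """Run one scripted user and return the measured response time of each action."""
    rng = random.Random(user_id)  # seeded per user so runs are repeatable
    timings = []
    for _ in range(actions):
        start = time.perf_counter()
        simulated_user_action()
        timings.append(time.perf_counter() - start)
        # Think time is set by the script (with +/-20% jitter), not by a person.
        time.sleep(think_time_s * rng.uniform(0.8, 1.2))
    return timings


def run_load(users: int, actions: int = 3, think_time_s: float = 0.01) -> list[float]:
    """Start all virtual users concurrently and collect every response time."""
    with ThreadPoolExecutor(max_workers=users) as pool:
        futures = [pool.submit(virtual_user, u, actions, think_time_s) for u in range(users)]
        return [t for f in futures for t in f.result()]


if __name__ == "__main__":
    samples = run_load(users=100)
    print(f"{len(samples)} samples, median {statistics.median(samples) * 1000:.1f} ms")
```

Because every timing comes from the same clock and the same script, results from 100 virtual users can be compared directly, which the stopwatch approach cannot offer.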

Conducting a performance test may seem simple, but there are many different views on the process. Amid this variety, applications often fail to deliver the level of performance their user base expects, so we must ask: why?

Digging out the Reason:

The main reason many applications fail to achieve better performance is a lack of prior testing and a failure to anticipate the future competitive environment. Most business requirements accept a slight dip in performance as the number of users grows. However, we need to keep a close eye on response and transaction times, because a growing number of users can also cause locking problems such as lock contention or deadlocks.
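To see why lock contention shows up as rising response times, the minimal Python sketch below serializes every simulated transaction on one shared lock, a hypothetical stand-in for something like a heavily updated database row. The 10 ms hold time is an assumption; the point is only that waiting time grows with the number of concurrent users even though the work per transaction stays the same.

```python
import statistics
import threading
import time

# Hypothetical shared resource, e.g. a row that every transaction must update.
resource_lock = threading.Lock()


def transaction(hold_time_s: float) -> float:
    """Acquire the shared lock, do some 'work', and return the total response time."""
    start = time.perf_counter()
    with resource_lock:
        time.sleep(hold_time_s)  # work done while holding the lock
    return time.perf_counter() - start


def mean_response(users: int, hold_time_s: float = 0.01) -> float:
    """Fire one transaction per user at the same moment and return the mean response time."""
    barrier = threading.Barrier(users)  # releases all users simultaneously
    results: list[float] = []
    results_guard = threading.Lock()

    def user() -> None:
        barrier.wait()
        elapsed = transaction(hold_time_s)
        with results_guard:
            results.append(elapsed)

    threads = [threading.Thread(target=user) for _ in range(users)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return statistics.mean(results)


if __name__ == "__main__":
    for n in (1, 5, 10, 20):
        print(f"{n:>3} users: mean response {mean_response(n) * 1000:.1f} ms")
```

With one user, the mean stays near the hold time; with ten users released together, most of each response time is spent waiting for the lock.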

Performance problems can arise when the architecture does not treat performance as one of the system's goals. A good design lends itself to good performance, or at least to the agility to change or reconfigure an application to cope with unexpected performance challenges.

Is there a solution?

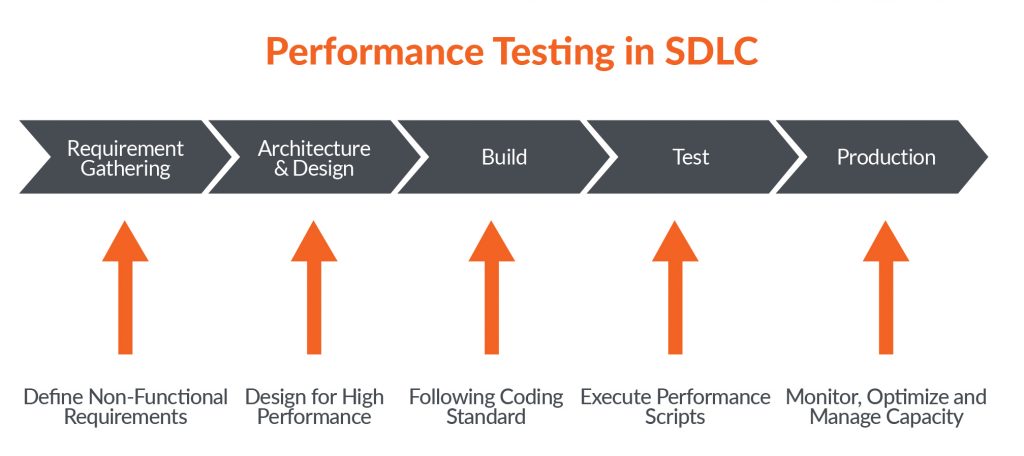

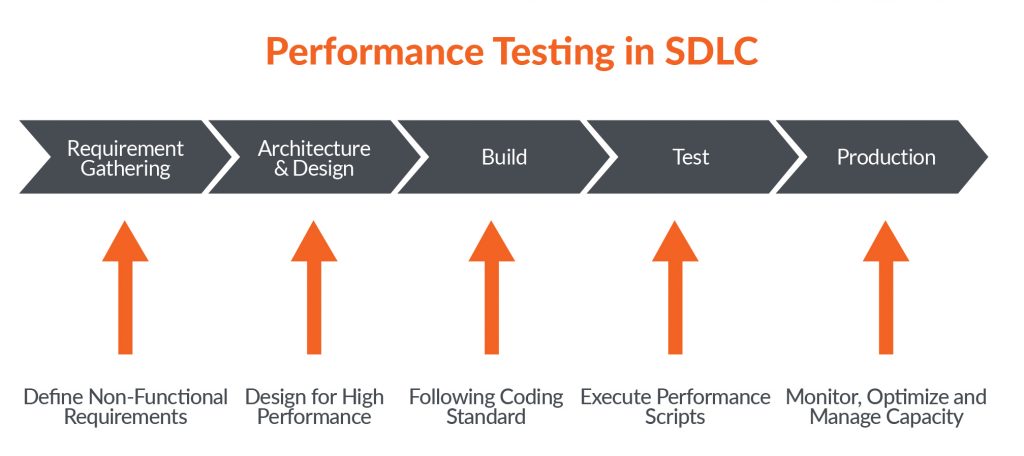

Yes, there is. Consider the structure proposed below for performance testing in the software development life cycle (SDLC):

Defining a Non-Functional Requirements (NFR) Questionnaire:

We can create a questionnaire to use as a standard template for every new project and to define the performance test life cycle. Let’s look at a few questions:

- How many end-users will the application need to support at release, or after 6 months, 12 months, or 2 years?

- Where will these users be located (geolocation), and how will they connect to the application?

- How many of these users will be concurrent at release, or after 6 months, 12 months, or 2 years?

- Which functionality will users use the most? We can use this to determine which features should be performance tested.

The answers then lead to other questions, such as:

- How many and what specification of servers will we need for each application tier?

- What sort of network infrastructure do we require?

Once we have answers to these questions, we can get the ball rolling and plan to include performance tests in the early stages of the SDLC.
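The questionnaire answers can be turned into concrete load-test targets. One common way, sketched below in Python, is the interactive response time law, N = X × (R + Z), which relates concurrent users N, throughput X, response time R, and think time Z. The `NfrAnswers` class, its field names, and the sample numbers are hypothetical illustrations, not values from any real project.

```python
from dataclasses import dataclass


@dataclass
class NfrAnswers:
    """Hypothetical record of questionnaire answers for one time horizon."""
    horizon: str               # e.g. "release", "6 months", "12 months", "2 years"
    total_users: int           # end users the application must support
    concurrent_users: int      # users active at the same time (N)
    target_response_s: float   # acceptable response time per request (R)
    think_time_s: float        # average pause between user actions (Z)

    def required_throughput(self) -> float:
        """Requests per second implied by the interactive response time law, N = X * (R + Z)."""
        return self.concurrent_users / (self.target_response_s + self.think_time_s)


if __name__ == "__main__":
    # Illustrative answers only; real values come from the customer.
    plan = [
        NfrAnswers("release", 5_000, 500, 2.0, 8.0),
        NfrAnswers("12 months", 20_000, 2_000, 2.0, 8.0),
    ]
    for answers in plan:
        print(f"{answers.horizon}: {answers.concurrent_users} virtual users, "
              f"~{answers.required_throughput():.0f} requests/s")
```

With these illustrative answers, 500 concurrent users with a 2 s response target and an 8 s think time imply about 50 requests per second at release.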

Design & Execution:

A vital part of designing and executing performance tests is choosing an appropriate tool. I always recommend using an automated performance testing tool because it gives the flexibility to run tests on demand and to correlate performance data from various sources, such as servers, networks, and application response times.

Here are my suggestions for choosing a performance testing tool:

- Application Technology Support: Make sure the performance testing tool supports the technology our application is built on. For example, if our application relies on database queries, we should select a tool based on the database query testing options it offers.

- Scripting Support: We need to consider whether the preferred testing tool requires extensive scripting to make test scripts robust. If it does, does our testing team have the necessary skills?

- User-friendly Reporting: The most important part of performance testing is analyzing the execution results. Before selecting a tool, we need to check its reporting structure. How user-friendly is the report analysis? Can we correlate the data for a large request load?
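Whatever tool we choose, the report should reduce thousands of raw samples to a few figures we can reason about. The Python sketch below, assuming a hypothetical `Sample` record per request, computes the request count, error rate, median and tail percentiles (using the inclusive interpolation method), and throughput. It assumes at least two samples.

```python
import statistics
from dataclasses import dataclass


@dataclass
class Sample:
    """One request result as a load tool might record it (hypothetical format)."""
    timestamp_s: float
    elapsed_s: float
    ok: bool


def summarize(samples: list[Sample]) -> dict[str, float]:
    """Reduce raw samples to the figures a readable report usually starts with."""
    elapsed = sorted(s.elapsed_s for s in samples)
    # quantiles(n=100) returns the 99 cut points for percentiles 1..99.
    pct = statistics.quantiles(elapsed, n=100, method="inclusive")
    duration = max(s.timestamp_s for s in samples) - min(s.timestamp_s for s in samples)
    return {
        "requests": len(samples),
        "error_rate": sum(not s.ok for s in samples) / len(samples),
        "p50_s": pct[49],
        "p95_s": pct[94],
        "p99_s": pct[98],
        "throughput_rps": len(samples) / duration if duration > 0 else float("nan"),
    }
```

Tail percentiles such as p95 and p99 are worth reporting alongside the median, because averages can hide the slow requests that users notice.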

Test Design:

For each performance test that we create, we need to consider the following points:

- Do we have baseline criteria? If not, develop a test that runs a single user through a base scenario, then define a performance baseline against which all future runs can be compared.

- Once we have established performance baselines, a load test is normally the next step. A load test is a classic performance test that determines the availability, concurrency or throughput, and response time of software. Because a load test simulates real-world scenarios, the customer is the best source of the user data and other relevant information we use in the simulation.

- Define & execute isolation tests to explore any problems revealed by load testing.

- Define & execute soak tests (running tests for an extended period of time) to reveal memory leaks in the application.

- Experiment with the application's load-balancing configuration and try to find bottlenecks in physical resources.

Performance Testing with Mock Services:

In software engineering, mocking means simulating the behavior of a real system in a controlled and systematic way.

Let us look at why mocking is needed in performance testing:

- Mocking can be used when we want to start performance testing early in the design phase and the targeted services are not yet developed or are not ready to be included in the system.

- Mocking can be useful when we want to test third-party APIs but cannot do so without impacting production services. Although a third party may provide a sandbox environment (dummy APIs), it may incur extra cost or lack the capacity for load testing.

Considering the points above, mock services are highly valuable: they remove these constraints and enable us to start testing in the early phases of the SDLC.

In this article, I am not going to cover how to write mock services because that is a separate topic. But as software engineers, we need to empower ourselves to use mock services in testing and also in the development phase.

Writing mock services also allows other team members to reuse them or to build more services around them.

In performance testing, mock services can be used as a test dependency directly in our test scripts, or we can use cloud tools such as BlazeMeter to integrate mock services into test creation.
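Without going into how to build a full mock service, here is a minimal Python sketch of the first option: a test script that starts a local stub in-process and measures calls against it. `MockPaymentApi`, the `/payments` path, the canned JSON body, and the 50 ms latency are all hypothetical; in practice, the latency should reflect what we know about the real dependency.

```python
import json
import threading
import time
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

MOCK_LATENCY_S = 0.05  # assumed latency of the real third-party API; tune as needed


class MockPaymentApi(BaseHTTPRequestHandler):
    """Hypothetical stand-in for a third-party API that returns a canned response."""

    def do_GET(self) -> None:
        time.sleep(MOCK_LATENCY_S)  # simulate the dependency's response time
        body = json.dumps({"status": "approved"}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format: str, *args) -> None:
        pass  # keep load-test output quiet


def start_mock() -> ThreadingHTTPServer:
    """Start the mock on a free local port in a background thread."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), MockPaymentApi)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


# Ignore any system proxy settings so requests go straight to the local mock.
_opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def timed_call(url: str) -> tuple[dict, float]:
    """Call the (mocked) dependency and return the decoded body and elapsed time."""
    start = time.perf_counter()
    with _opener.open(url, timeout=5) as resp:
        payload = json.load(resp)
    return payload, time.perf_counter() - start


if __name__ == "__main__":
    server = start_mock()
    url = f"http://127.0.0.1:{server.server_address[1]}/payments"
    try:
        for _ in range(3):
            payload, elapsed = timed_call(url)
            print(payload["status"], f"{elapsed * 1000:.0f} ms")
    finally:
        server.shutdown()
        server.server_close()
```

Binding to port 0 lets the operating system pick a free port, so several test runs can start their own mocks without clashing.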

In short, if we do not start our planning with performance and stability in mind, we expose our application to significant risks and, in the long term, can lose business.